AI and the Enterprise

Corporate AI projects are not driving the expected productivity gains, but don't read too much into it - yet.

As you may know, I work at a large-ish company, in the group that builds IT training and certifications (it wouldn't be hard to find out which company, and it won't surprise you).

We are going all in on AI, and I will admit that for certain tasks, generative AI is helpful. Of course, we have our own deployments, blessed by infosec and RAG'd with our data for our use. (No, we don't get to use that to build training; confidential information is a no-no in public-facing training.)

The last year has been a heady one, full of anticipation for the rise of agentic AI and a bevy of agents that will automate large swaths of the day-to-day drudgery. So far, I have not seen any that actually work; in fact, our only approved agent is one that I can use as a manager to bypass the abomination that is Workday. Yay, I guess.

Anyhow, as I said, I use it pretty frequently, but I have to keep it in perspective. It is a tool, like Jira, or Trello, or Miro, or Lucid, or Figma (yada yada yada).

Regardless, best estimates are that $294B was spent in 2024 to build out infrastructure (data centers, mountains of GPUs, and lots and lots of switching hardware to shuffle data around those data centers), which, by some analysts' reckoning, rivals consumer spending as a contributor to US economic growth.

Massive amounts of money sloshing around.

Part of this build-out was to support enterprise deployments of AI, and executives got excited about AI being a productivity enhancer akin to the PC and IT waves that began in the 1980s and accelerated with the rise of the internet.

That said, recently there have been some hiccups.

First, OpenAI's launch of GPT-5 was largely panned as "meh" and not living up to the hype. Even CEO Sam Altman is conceding that simple scaling is not delivering quantum leaps in performance. (And it still can't count the b's in the word "blueberry": it says there are 3.)
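For the record, this is the kind of thing ordinary code gets right every time. A trivial Python check of the ground truth (it says nothing about how a language model works internally; it just counts characters):

```python
# Count occurrences of the letter "b" in the word from the example above.
word = "blueberry"
b_count = word.count("b")

print(f"{word!r} contains {b_count} b's")
```

That prints a count of 2, not 3.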

Second, Meta's AI buddies are leading to psychological issues in users, as people cocoon themselves even more deeply in their fantasy land (and then there is xAI's super creepy, "sexified" anime girlfriend[1]). People are also using ChatGPT to replace flesh-and-blood therapists, which is leading to some pretty serious mental health issues, since chatbots are tuned to be obsequious and submissive/supportive.

Third, AI coding agents: a reasonably useful use case where Cursor is dominant, but these tools are built on ChatGPT, Claude, and Gemini, which leaves them exposed to their suppliers' pricing, as was seen in the hijinks of Anthropic/Cursor pricing. (Ed Zitron does a deep dive; read the section titled "Cursor's Cursed Price Changes" for details.)

Anyhow, that is not what I came here to write about. As I mentioned above, a lot of enterprises have invested in AI projects so as not to miss out on the hype (EFOMO).

How are Enterprise Projects and Deployments going?

MIT recently dropped a bombshell report, saying that 95% of corporate AI programs are not merely non-performing, but failing:

From the article (you can get a link to the report, but you have to submit your info):

“Some large companies’ pilots and younger startups are really excelling with generative AI,” Challapally said. Startups led by 19- or 20-year-olds, for example, “have seen revenues jump from zero to $20 million in a year,” he said. “It’s because they pick one pain point, execute well, and partner smartly with companies who use their tools,” he added.

But for 95% of companies in the dataset, generative AI implementation is falling short. The core issue? Not the quality of the AI models, but the “learning gap” for both tools and organizations. While executives often blame regulation or model performance, MIT’s research points to flawed enterprise integration. Generic tools like ChatGPT excel for individuals because of their flexibility, but they stall in enterprise use since they don’t learn from or adapt to workflows, Challapally explained. (emphasis mine)

This is the conundrum. Executives get access to ChatGPT, and it is great for them. They are storytellers, and that is where ChatGPT (or Claude, or Gemini, or any of them really) excels. But taking that consumer-facing tech and making it safe for use with corporate data is a headache (data leakage; there's a story I could tell you, but I can't), and when you do erect proper safeguards, the tools become less useful.

I will not pretend that there aren't use cases that they are great for, even if the people who have to interface with customer support bots hate them (and yes, they can tell they aren't chatting with a real person).

But the be-all and end-all that replaces flesh-and-blood workers en masse? Not yet.

Ok, the article is a bit clickbait-y, but it does report that expectations of success have been tempered, that the payback isn't as rapid as leaders anticipated, and that the technology isn't as robust as it seemed 6, 9, or 12 months ago.

Still, the article then begins to sound like a salesperson for the bespoke vendors:

How companies adopt AI is crucial. Purchasing AI tools from specialized vendors and building partnerships succeed about 67% of the time, while internal builds succeed only one-third as often.

This finding is particularly relevant in financial services and other highly regulated sectors, where many firms are building their own proprietary generative AI systems in 2025. Yet, MIT’s research suggests companies see far more failures when going solo.

Companies surveyed were often hesitant to share failure rates, Challapally noted. “Almost everywhere we went, enterprises were trying to build their own tool,” he said, but the data showed purchased solutions delivered more reliable results.

Uh, sure, rolling your own (aka NIH, not-invented-here) is not a good idea, but from what I've read, these vendors are building on top of the public tools, adding some secret sauce, and then reselling the result. (Caveat: I am currently reviewing AI content creation tools to automate and streamline the building of training.)

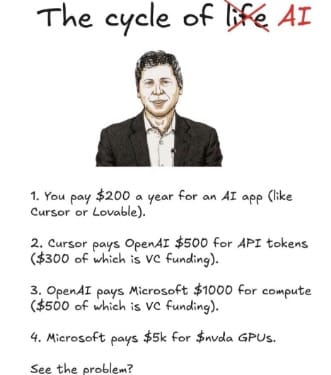

Yet none of the underlying companies are profitable. The last estimate I read on OpenAI's cash flow is that every dollar of revenue costs $2.35 to deliver. Yes, they have 700M monthly users, but only a small fraction pay. Enterprises are the largest share of their revenue, but with each model generation, the cost per input/output token continues to rise.
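To put that ratio in perspective, here is a back-of-the-envelope sketch. It assumes the $2.35 estimate holds (it is a number I read, not an audited figure), and the revenue amount is a made-up placeholder purely for illustration:

```python
# Estimated cost to deliver one dollar of revenue (unaudited estimate from the text).
COST_PER_REVENUE_DOLLAR = 2.35


def loss_on_revenue(revenue: float, cost_ratio: float = COST_PER_REVENUE_DOLLAR) -> float:
    """Return the loss implied by spending `cost_ratio` dollars per dollar of revenue."""
    return revenue * (cost_ratio - 1.0)


# Hypothetical revenue figure, chosen only to make the arithmetic easy to read.
hypothetical_revenue = 100.0
loss = loss_on_revenue(hypothetical_revenue)

print(f"Loss on ${hypothetical_revenue:.0f} of revenue: ${loss:.2f}")
```

In other words, at that ratio every dollar that comes in the door loses about $1.35, so growing revenue alone only grows the hole unless the cost side changes.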

This is feeling a lot like Uber in the 2010s, when VCs and sovereign wealth funds footed the bill for the build-out and the decimation of the entrenched taxi cartels before flipping the switch. Now it costs more to take an Uber than it used to cost to take a yellow cab.

One day, the investors will demand an ROI, and that will lead to prices going up. People will begin to realize that using ChatGPT to help them write an email, or draft a quick blog post, or do some copywriting for an advertisement will not be free but will cost a couple hundred bucks a month. Truth is, we don't yet know how much it costs to deliver a result from a query.

That will be a rude awakening, and it will be interesting to study how that reality will hit. I look forward to Ed Zitron's deconstruction of that event horizon.

For now, I will continue to use the free versions.

[1] eww